Stay up to date with the latest Bitcoin prices! Our app provides real-time tracking and an easy-to-use interface.

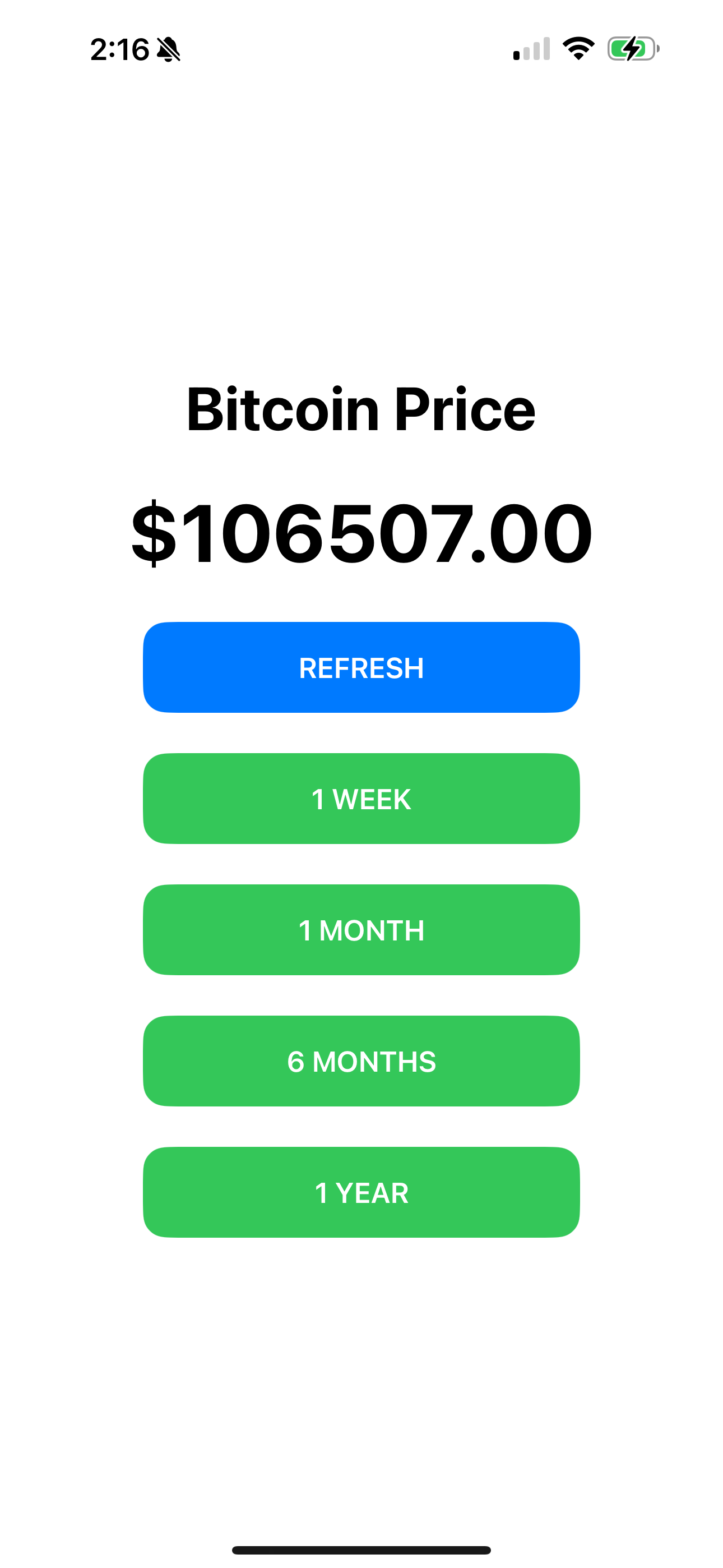

Get instant access to the current price of Bitcoin with our mobile app. Whether you’re a seasoned investor or just starting out, our app makes it easy to track the price of Bitcoin in real time.

With its intuitive design and user-friendly interface, you’ll be able to quickly check the current price and stay informed about market trends. Our app is perfect for anyone who is looking to invest in Bitcoin or simply wants to stay up to date with the latest prices.

Key Features:

- Real-time tracking of Bitcoin prices

- Easy-to-use interface for quick access to current prices

- Perfect for investors, traders, or anyone interested in staying informed about Bitcoin market trends

Download our app today and start tracking the price of Bitcoin on the go!